Upload files to Fabric OneLake with a Service Principal and Python

I've had the unfortunate pleasure of working with Microsoft Fabric lately. This is one way to upload a file to OneLake, Fabric's storage layer, using Python and a Service Principal.

In Azure

- Create a new App:

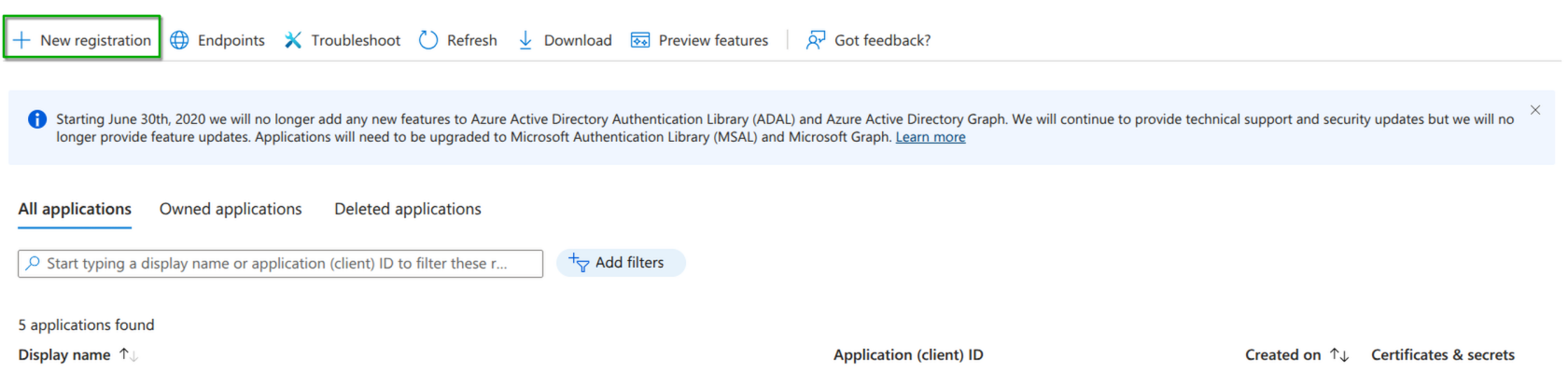

- Go to App registrations, click New registration, and complete the form

- The name largely doesn't matter, and Accounts in this organizational directory only is generally sufficient. A redirect URI isn't necessary.

- Open the newly created App by going back to App registrations and selecting All applications

- In the Overview tab, copy the Display name, Application (client) ID, and Directory (tenant) ID

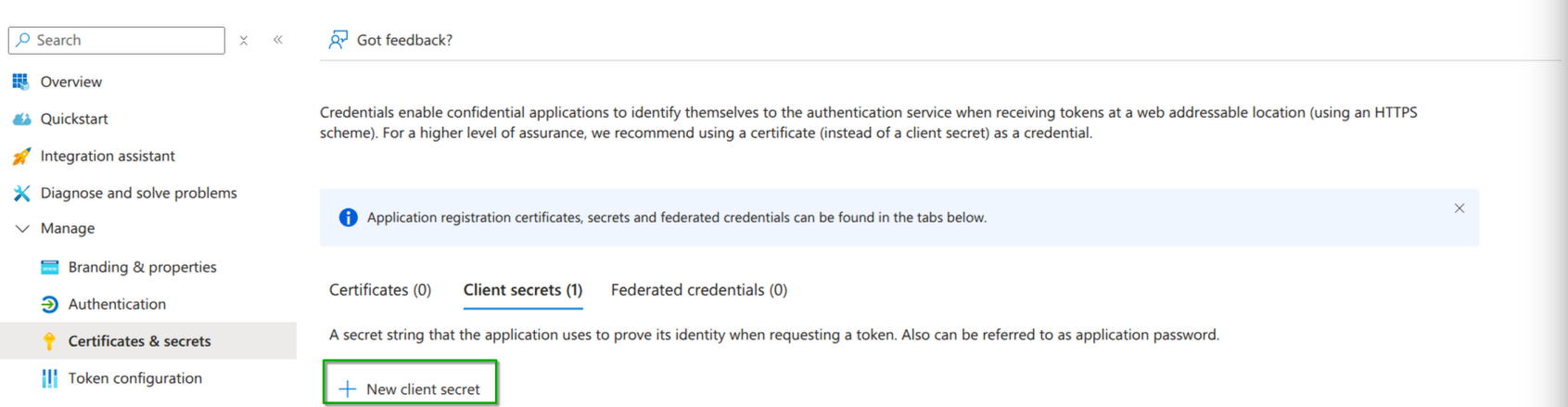

- Open the Certificates & secrets page under Manage

- Create a new client secret by selecting Client secrets and New client secret. Choose the appropriate name and expiration date for your use-case.

- Copy and save the secret Value (not the Secret ID) right away; it is only shown in full right after creation

In Fabric

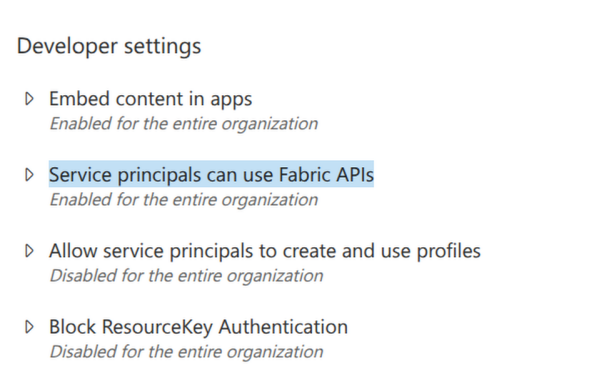

- Open the Tenant settings in Admin Portal: https://app.fabric.microsoft.com/admin-portal/tenantSettings

- Scroll down to Developer settings and enable the option: Service principals can use Fabric APIs.

- If the upload later fails with an authorization error, check that the service principal has access to the workspace (Manage access, typically Contributor or higher). The Display name copied earlier is what you search for there.

In Python

Install libraries with the following command:

pip install azure-identity azure-storage-file-datalake

Create a new JSON file called credentials.json with the following content:

{
  "tenant_id": "YOUR-TENANT-ID",
  "client_id": "YOUR-CLIENT-ID",
  "client_secret": "YOUR-CLIENT-SECRET-VALUE"
}
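
A forgotten placeholder in credentials.json tends to surface later as a confusing authentication error, so it can help to check the file up front. The following is a minimal sketch, not part of the original script: `load_credentials` and `REQUIRED_KEYS` are hypothetical names, and the "YOUR-" prefix check simply assumes the placeholders shown above.

```python
import json
from pathlib import Path

# Keys the upload script reads from credentials.json
REQUIRED_KEYS = ("tenant_id", "client_id", "client_secret")


def load_credentials(path="./credentials.json"):
    """Load the credentials file and fail early on missing or placeholder values."""
    with Path(path).open(encoding="utf-8") as handle:
        config = json.load(handle)

    # Collect every key that is absent, empty, or still a "YOUR-..." placeholder.
    problems = [
        key
        for key in REQUIRED_KEYS
        if not config.get(key) or str(config[key]).startswith("YOUR-")
    ]
    if problems:
        raise ValueError(f"Missing or placeholder values in {path}: {', '.join(problems)}")
    return config
```

If you use it, `config = load_credentials()` would take the place of the plain `json.load` call in the script.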

Create a new script in the same folder as your credentials.json file, with the following content:

import json

from azure.identity import ClientSecretCredential
from azure.storage.filedatalake import DataLakeServiceClient

with open("./credentials.json") as config_file:
    config = json.load(config_file)

credential = ClientSecretCredential(
    tenant_id=config.get("tenant_id"),
    client_id=config.get("client_id"),
    client_secret=config.get("client_secret"),
)

workspace = "YOUR-FABRIC-WORKSPACE-NAME"
lakehouse = "YOUR-FABRIC-LAKEHOUSE-STORAGE-ENDPOINT-NAME"
file_directory = "NAME-OF-THE-FOLDER-TO-UPLOAD-TO"
onelake_file_name = "NAME-OF-THE-FILE-IN-ONELAKE"
filesystem_file_path = "PATH-OF-THE-FILE-IN-CURRENT-FILESYSTEM"

service_client = DataLakeServiceClient(account_url="https://onelake.dfs.fabric.microsoft.com/", credential=credential)
file_system_client = service_client.get_file_system_client(file_system=workspace)  # the workspace acts as the file system
directory_client = file_system_client.get_directory_client(f"{lakehouse}.Lakehouse/Files/{file_directory}/")
file_client = directory_client.get_file_client(onelake_file_name)

with open(filesystem_file_path, "rb") as file:
    file_client.upload_data(file)  # Add the option 'overwrite=True' if needed
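
If you run the upload more than once, it may be convenient to wrap the last few steps in a function that overwrites the existing file and then checks that it appears in a directory listing. This is a sketch under assumptions rather than a tested recipe: `onelake_directory` and `upload_and_verify` are hypothetical helper names, and the check assumes the names returned by `get_paths` end with the file name.

```python
def onelake_directory(lakehouse, file_directory):
    """Build the directory path used in the script: <lakehouse>.Lakehouse/Files/<folder>."""
    return f"{lakehouse}.Lakehouse/Files/{file_directory.strip('/')}"


def upload_and_verify(file_system_client, lakehouse, file_directory, onelake_file_name, local_path):
    """Upload local_path, replacing any existing file, then look for it in a listing."""
    directory = onelake_directory(lakehouse, file_directory)
    directory_client = file_system_client.get_directory_client(directory)
    file_client = directory_client.get_file_client(onelake_file_name)

    with open(local_path, "rb") as file:
        file_client.upload_data(file, overwrite=True)

    # Assumption: each listed entry exposes .name ending in "/<file name>".
    names = [path.name for path in file_system_client.get_paths(path=directory)]
    return any(name.endswith(f"/{onelake_file_name}") for name in names)
```

With the variables from the script, the call would look like `upload_and_verify(file_system_client, lakehouse, file_directory, onelake_file_name, filesystem_file_path)`, which returns True when the file shows up in the listing.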

Enjoy!

For more info about Microsoft Entra service principals: https://learn.microsoft.com/en-us/entra/identity-platform/howto-create-service-principal-portal